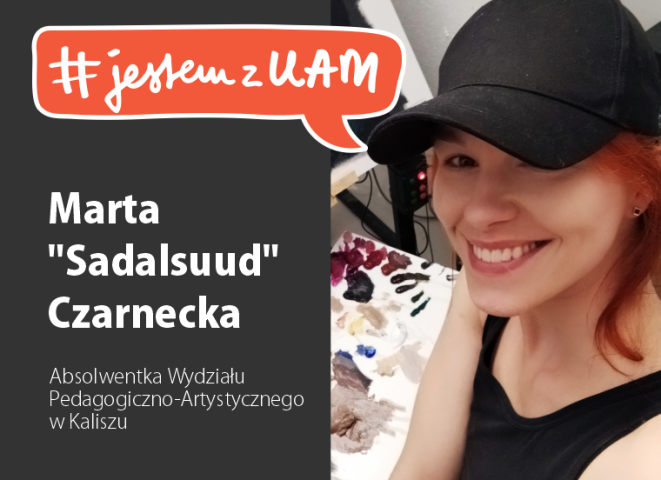

#jestemzUAM: Marta "Sadalsuud" Czarnecka

Artist, painter, graduate of the Faculty of Pedagogy and Fine Arts in Kalisz

Prof. Bogumiła Kaniewska elected for a second term

Prof. dr hab. Bogumiła Kaniewska has been elected Rector of UAM for the 2024-2028 term.

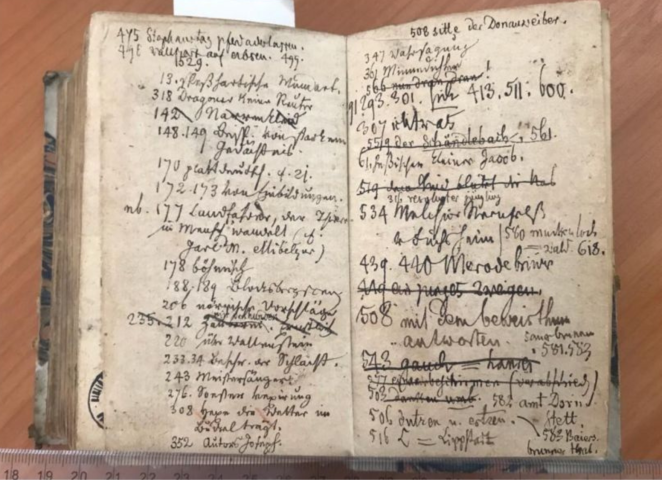

Discovery at the University Library in Poznań

27 new items from the private library of the Brothers Grimm found at the University Library in Poznań (BUP)

Frontiers Planet Prize

Prof. Michał Bogdziewicz named National Champion in the international Frontiers Planet Prize 2024 competition



Top news

- BESTStudentCAMP 2024 Summer School

  Applications are now open for the BESTStudentCAMP 2024 competition, which aims to support students in the early stages of their studies by equipping them with research skills and the soft skills useful in academic work.

- Results of the 7th UAM University Art Competition announced

  The winners of the 7th Piotr Wójciak UAM University Art Competition in the "Studenckie impresje" (Student Impressions) series, organised as part of the 27th Poznań Science and Art Festival, have been announced.

- Information meeting for international candidates

  On 8 May we invite you to an information meeting on admission to studies for international candidates.

- Czesław Miłosz poetry recitation competition

  We invite school pupils, students and adults to take part in the university recitation competition.

- UAM among the universities with the most research publications

  UAM was one of the three institutions that submitted the most publications to the Polish Scholarly Bibliography (Polska Bibliografia Naukowa) in 2017-2021.

Videos

- Results of the election for Rector of Adam Mickiewicz University in Poznań

- International Day of Children's Universities at UAM

  On 11 April, UAM celebrated the International Day of Children's Universities. Dr Renata Popiołek, coordinator of the Kolorowy Uniwersytet (Colourful University) project, talks about the idea behind the day.

- UAM University Educational Synergy (Uniwersytecka Synergia Edukacyjna). Third meeting: special educational needs

- Scholars of the University of Poznań and the Jewish question in the interwar period

- Tolkien Reading Day in Poznań

  On 22-23 March 2024, the UAM Faculty of History hosted Tolkien Reading Day celebrations, organised in cooperation with Drużyna Pyrlandii.


University

Adam Mickiewicz University in Poznań is one of the leading academic centres in Poland and a research university. Its reputation rests on its tradition, the outstanding research achievements of its academic staff, an attractive study programme and excellent facilities. The university's mission is to conduct high-quality research, to educate in cooperation with its social and economic environment, and to foster social responsibility. The university is a centre of knowledge in which research and teaching are closely intertwined.

- 204 fields of study and specialisations

- 3rd best university in Poland

- 100+ years of tradition


Get to know us

- Open University (Uniwersytet Otwarty)

  One of UAM's initiatives, offering regular courses and training open to anyone, regardless of their level of education.

- Engaged University (Uniwersytet Zaangażowany)

  See UAM's contribution to the development of the region, the country and the wider community.

- UAM Shop

  The UAM online shop offers all of the university's promotional merchandise in one place. The range includes university gadgets, clothing, office supplies, bags, caps and mugs.

- Staff and unit search

  Convenient search for information on UAM staff and organisational units.

- Życie Uniwersyteckie (University Life)

  The official UAM monthly, featuring articles, interviews with researchers and plenty of interesting news about the university.

- UAM gardens and palaces

  Explore our notable sites.


Research

Adam Mickiewicz University in Poznań is one of the leading research centres in the country. Since 2019 it has belonged to the prestigious group of research universities. Research at UAM is conducted in several dozen scientific disciplines. There are currently 586 ongoing research projects with combined budgets exceeding PLN 1.3 billion. The results of earlier research have been presented in 4,727 publications, including books, monographs, and articles in national and international journals.

- 586 research projects

- PLN 1.3 billion for research

- 4,727 research publications per year


Study at UAM!

More than 29,000 people study at UAM. Join them! Visit our Blog. See the website for candidates. The university also offers postgraduate studies and doctoral education. Check the website for students. Learn more about student life at UAM. Make the most of all the opportunities that studying at Adam Mickiewicz University in Poznań gives you.

- 2,860 places in student dormitories

- 141 fields of study

- 50 postgraduate programmes


Cooperation

More than 29,000 people study at UAM and over 5,000 work here. We cooperate with many institutions in Poland and around the world. If you would like to join us, see our current job offers and announced public procurement tenders.

- PLN 0.7 billion budget

- 3,016 academic teachers

- 338 partner universities
